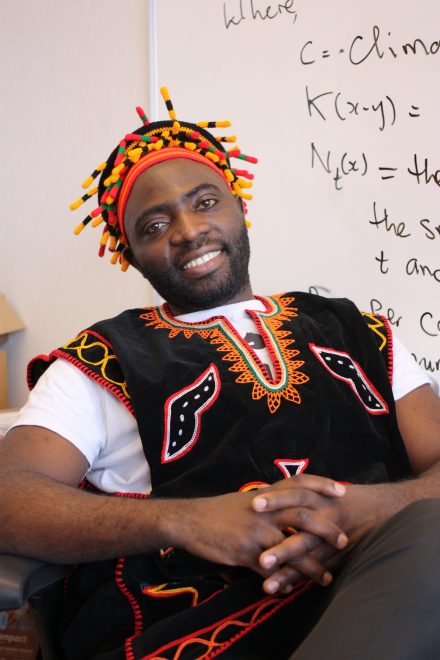

Instructor

Jude Kong

Contact Information

Office: 662

Email: Jude.kong@utoronto.ca

Office Hours: By Appointment

Course Time: Fridays, 9-11:30 am

Course Type: In-person lectures and hands-on labs

Prerequisites

None

Course Description

Introduction to Data Science offers a comprehensive foundation in the principles, tools, and techniques of modern data analysis. Designed for beginners, the course guides students through the full data science pipeline—from ethical data sourcing and preparation to modeling and interpretation—using Python and its powerful data science libraries. Students will learn to retrieve and clean real-world datasets, perform exploratory data analysis, and apply machine learning methods including regression, classification, and clustering. Emphasis is placed on responsible, explainable, and locally relevant practices. The course also introduces natural language processing through topic modeling and sentiment analysis, equipping students with the practical skills to derive insights from structured and unstructured data. By the end, students will be able to build, evaluate, and communicate data-driven solutions across diverse domains.

Learning Outcomes

By the end of this course, students will be able to:

- Explain and contextualize the role of data science, its ethical foundations, and its relevance across disciplines and real-world applications.

- Demonstrate proficiency in using Python and associated libraries to acquire, clean, transform, and prepare diverse datasets for analysis.

- Apply exploratory data analysis (EDA) to uncover patterns, assess data quality, and generate insights through visualization and statistical reasoning.

- Develop and evaluate predictive models across regression, classification, and ensemble methods, applying best practices for feature engineering, hyperparameter tuning, and explainable AI.

- Implement unsupervised learning approaches such as clustering and dimensionality reduction to discover structure in complex datasets.

- Analyze textual data using natural language processing techniques, including topic modeling and sentiment analysis, to extract and interpret meaning from unstructured sources.

- Integrate ethical, legal, and responsible practices in data handling, model development, and communication of results, with particular attention to fairness, accountability, and local relevance.

- Communicate findings effectively to diverse audiences, using clear narratives, visualizations, and evidence-based interpretations that support decision-making.

Course Evaluation

| Item | Description | Weight | Due date |
|---|---|---|---|
| Participation | Active engagement in class discussions and preparation for sessions | 10% | Ongoing |
| Assignment #1 | Students will identify key variables from academic literature and credible media sources, locate corresponding public datasets, and construct well-documented datasets. These datasets will serve as the foundation for addressing one of the following project topics in subsequent assignments: 1. Impact of Supervised Consumption Site Closures in Toronto: conduct a census division–level analysis of the impact of closing supervised drug consumption sites on overdose incidents and drug-related mortality in Toronto, with the goal of generating evidence to inform policy decisions. 2. Determinants of Measles Transmission Across Canada: investigate the social, demographic, and environmental factors influencing measles transmission rates at the census division level across Canada. 3. Climate and Environmental Influences on Public Health in Toronto: explore how climate and environmental variables affect the health and well-being of Toronto residents, using census division–level data. Report: to be prepared in the same format as the following papers: 1. Nia ZM, Bragazzi NL, Wu J, Kong JD. A Twitter dataset for monkeypox, May 2022. Data in Brief. 2023 Jun 1;48:109118. 2. Ahmed H, Cargill T, Bragazzi NL, Kong JD. Dataset of non-pharmaceutical interventions and community support measures across Canadian universities and colleges during COVID-19 in 2020. Frontiers in Public Health. 2022 Nov 17;10:1066654. 3. Kaur M, Bragazzi NL, Heffernan J, Tsasis P, Wu J, Kong JD. COVID-19 in Ontario Long-term Care Facilities Project, a manually curated and validated database. Frontiers in Public Health. 2023 Feb 10;11:1133419. | 15% | Oct 3 |
| Assignment #2 | Students will conduct exploratory data analysis (EDA) on the dataset they developed in Assignment #1. | 15% | Oct 24 |
| Assignment #3 | Students will perform data modeling and evaluation using machine learning on the dataset they developed in Assignment #1. | 15% | Nov 7 |
| Assignment #4 | Students will perform and evaluate a clustering analysis (unsupervised learning) on the dataset they developed in Assignment #1. | 15% | Nov 21 |
| Final Project | Students will integrate the work from previous assignments, namely the dataset from Assignment #1, the exploratory data analysis (EDA) from Assignment #2, the machine learning modeling and evaluation from Assignment #3, and the clustering analysis from Assignment #4, to produce a publication-ready manuscript (refer to the sample readings for formatting guidance). Students will select one of the following topics: 1. Impact of Supervised Consumption Site Closures in Toronto: conduct a census division–level analysis of the effect of closing supervised drug consumption sites on overdose incidents and drug-related mortality in Toronto, with the goal of generating evidence that can inform policy decisions. 2. Determinants of Measles Transmission Across Canada: investigate the social, demographic, and environmental factors influencing measles transmission rates at the census division level across Canada. 3. Climate and Environmental Influences on Public Health in Toronto: explore how climate and environmental variables affect the health and well-being of Toronto residents, using census division–level data. | 30% (10% presentation + 20% report) | Dec 5 |

Required Texts and Readings

I will draw from the following textbooks, alongside relevant papers and my own research, to inform the course content.

Main Textbooks

- Igual, L., & Seguí, S. (2024). Introduction to Data Science: A Python Approach to Concepts, Techniques and Applications. Cham: Springer International Publishing.

- Baig, M. R., Govindan, G., & Shrimali, V. R. (2021). Data Science for Marketing Analytics: A practical guide to forming a killer marketing strategy through data analysis with Python. Packt Publishing Ltd.

Other Textbooks to Consider

- Nawaz, M. W. Data Science Crash Course for Beginner: Fundamental and practices with Python.

- Ou, G., Zhu, Z., Dong, B., & E, W. (2023). Introduction to data science. World Scientific.

- McKinney, W. (2022). Python for data analysis. O’Reilly Media.

- Grus, J. (2019). Data science from scratch: First principles with Python. O’Reilly Media.

Recommended Papers

- Movahedi Nia, Z., Prescod, C., Westin, M., Perkins, P., Goitom, M., Fevrier, K., … & Kong, J. D. (2025). Disproportionate impact of the COVID-19 pandemic on socially vulnerable communities: the case of Jane and Finch in Toronto, Ontario. Frontiers in Public Health, 13, 1448812.

- Nia, Z. M., Prescod, C., Westin, M., Perkins, P., Goitom, M., Fevrier, K., … & Kong, J. (2024). Cross-sectional study to assess the impact of the COVID-19 pandemic on healthcare services and clinical admissions using statistical analysis and discovering hotspots in three regions of the Greater Toronto Area. BMJ Open, 14(3), e082114.

- Kaur, M., Bragazzi, N. L., Heffernan, J., Tsasis, P., Wu, J., & Kong, J. D. (2023). COVID-19 in Ontario Long-term Care Facilities Project, a manually curated and validated database. Frontiers in Public Health, 11, 1133419.

- Ahmed, H., Cargill, T., Bragazzi, N. L., & Kong, J. D. (2022). Dataset of non-pharmaceutical interventions and community support measures across Canadian universities and colleges during COVID-19 in 2020. Frontiers in Public Health, 10, 1066654.

- Kong, J. D., Tekwa, E. W., & Gignoux-Wolfsohn, S. A. (2021). Social, economic, and environmental factors influencing the basic reproduction number of COVID-19 across countries. PLOS ONE, 16(6), e0252373.

- Yan, C., Law, M., Nguyen, S., Cheung, J., & Kong, J. (2021). Comparing public sentiment toward COVID-19 vaccines across Canadian cities: analysis of comments on Reddit. Journal of Medical Internet Research, 23(9), e32685.

- Cheong, Q., Au-Yeung, M., Quon, S., Concepcion, K., & Kong, J. D. (2021). Predictive modeling of vaccination uptake in US counties: A machine learning–based approach. Journal of Medical Internet Research, 23(11), e33231.

- Bouba, Y., Tsinda, E. K., Fonkou, M. D. M., Mmbando, G. S., Bragazzi, N. L., & Kong, J. D. (2021). The determinants of the low COVID-19 transmission and mortality rates in Africa: a cross-country analysis. Frontiers in Public Health, 9, 751197.

- Movahedi Nia, Z., Bragazzi, N., Asgary, A., Orbinski, J., Wu, J., & Kong, J. (2023). Mpox panic, infodemic, and stigmatization of the two-spirit, lesbian, gay, bisexual, transgender, queer or questioning, intersex, asexual community: geospatial analysis, topic modeling, and sentiment analysis of a large, multilingual social media database. Journal of Medical Internet Research, 25, e45108.

- Yuh, M. N., Ndum Okwen, G. A., Miong, R. H. P., Bragazzi, N. L., Kong, J. D., Movahedi Nia, Z., … & Patrick Mbah, O. (2024). Using an innovative family-centered evidence toolkit to improve the livelihood of people with disabilities in Bamenda (Cameroon): a mixed-method study. Frontiers in Public Health, 11, 1190722.

- Kazemi, M., Bragazzi, N. L., & Kong, J. D. (2022). Assessing inequities in COVID-19 vaccine roll-out strategy programs: a cross-country study using a machine learning approach. Vaccines, 10(2), 194.

- Olaniyan, T., Christidis, T., Quick, M., Machipisa, T., Sajobi, T., Kong, J., … & Tjepkema, M. (2025). Understanding mortality differentials of Black adults in Canada. Health Reports, 36(4), 3-13.

- Tao, S., Bragazzi, N. L., Wu, J., Mellado, B., & Kong, J. D. (2022). Harnessing artificial intelligence to assess the impact of nonpharmaceutical interventions on the second wave of the coronavirus disease 2019 pandemic across the world. Scientific Reports, 12(1), 944.

- Nia, Z. M., Ahmadi, A., Bragazzi, N. L., Woldegerima, W. A., Mellado, B., Wu, J., … & Kong, J. D. (2022). A cross-country analysis of macroeconomic responses to COVID-19 pandemic using Twitter sentiments. PLOS ONE, 17(8), e0272208.

- Ogbuokiri, B., Ahmadi, A., Bragazzi, N. L., Movahedi Nia, Z., Mellado, B., Wu, J., … & Kong, J. (2022). Public sentiments toward COVID-19 vaccines in South African cities: An analysis of Twitter posts. Frontiers in Public Health, 10, 987376.

- Kong, J. D., Akpudo, U. E., Effoduh, J. O., & Bragazzi, N. L. (2023). Leveraging responsible, explainable, and local artificial intelligence solutions for clinical public health in the Global South. Healthcare, 11(4), 457.

- Nia, Z. M., Asgary, A., Bragazzi, N., Mellado, B., Orbinski, J., Wu, J., & Kong, J. (2022). Nowcasting unemployment rate during the COVID-19 pandemic using Twitter data: The case of South Africa. Frontiers in Public Health, 10, 952363.

- Nia, Z. M., Seyyed-Kalantari, L., Goitom, M., Mellado, B., Ahmadi, A., Asgary, A., … & Kong, J. D. (2025). Leveraging deep-learning and unconventional data for real-time surveillance, forecasting, and early warning of respiratory pathogens outbreak. Artificial Intelligence in Medicine, 161, 103076.

- Nia, Z., Bragazzi, N. L., Gizo, I., Gillies, M., Gardner, E., Leung, D., & Kong, J. D. (2025). Integrating Deep Learning Methods and Web-Based Data Sources for Surveillance, Forecasting, and Early Warning of Avian Influenza (May 13, 2025).

- Movahedi Nia, Z., Bragazzi, N. L., Ahamadi, A., Asgary, A., Mellado, B., Orbinski, J., … & Kong, J. D. (2023). Off-label drug use during the COVID-19 pandemic in Africa: topic modelling and sentiment analysis of ivermectin in South Africa and Nigeria as a case study. Journal of the Royal Society Interface, 20(206), 20230200.

- Zhou, X., Nia, Z., Bragazzi, N. L., Gizo, I., Gillies, M., Gardner, E., … & Kong, J. D. (2025). Predicting Avian Influenza Hotspots in the US Using Logistic Regression and Random Forest with Augmented Data via Empirical Distribution Method and Stochastic Variational Inference. Available at SSRN 5211568.

- Pang, O., Nia, Z., Gillies, M., Leung, D., Bragazzi, N. L., Gizo, I., & Kong, J. D. (2024). Analyzing Reddit Social Media Content in the United States Related to H5N1: A Sentiment and Topic Modeling Study. Available at SSRN 5078277.

- Effoduh, J. O., Akpudo, U. E., & Kong, J. D. (2024). Toward a trustworthy and inclusive data governance policy for the use of artificial intelligence in Africa. Data & Policy, 6, e34.

- Tayyem, R., Qasrawi, R., Al Sabbah, H., Amro, M., Issa, G., Thwib, S., … & Kong, J. D. (2025). The impact of digital literacy and internet usage on health behaviors and decision-making in Arab MENA countries. Technology in Society, 82, 102911.

- Forteh, E., Ngemenya, M. N., Kwalar, G. I., Alembong, E. N. N., Tanue, E. A., Kibu, O. D., … & Nsagha, D. S. (2025). Determinants of the use of Digital One Health amongst medical and veterinary students at the University of Buea, Cameroon. medRxiv, 2025-04.

- Ayana, G., Gulilat, H., Lemma, K., Teshome, F., Daba, H., Malik, T., … & Kong, J. D. (2025). A Rapid Evaluation of the Preparedness of Ethiopia’s Disease Surveillance System for Mpox Outbreak: A Cross-Sectional Study of Perspectives from Professionals Across Various Levels. Available at SSRN 5387168.

- Faye, S. L. B., Nkweteyim, D., Sow, G. H. C., Diop, B., Diongue, F. B., Cisse, B., … & Kong, J. (2025). Reimagining Artificial Intelligence for zoonotic disease detection in Africa: a decolonial approach rooted in community engagement and local knowledge. AI and Ethics, 1-28.

- Mbogning Fonkou, M. D., & Kong, J. D. (2024). Leveraging machine learning and big data techniques to map the global patent landscape of phage therapy. Nature Biotechnology, 42(12), 1781-1791.

- Han, Q., Rutayisire, G., Mbogning Fonkou, M. D., Avusuglo, W. S., Ahmadi, A., Asgary, A., … & Kong, J. D. (2024). The determinants of COVID-19 case reporting across Africa. Frontiers in Public Health, 12, 1406363.

- Perikli, N., Bhattacharya, S., Ogbuokiri, B., Nia, Z. M., Lieberman, B., Tripathi, N., … & Mellado, B. (2023). Detecting the presence of COVID-19 vaccination hesitancy from South African Twitter data using machine learning. arXiv preprint arXiv:2307.15072.

- Nia, Z. M., Bragazzi, N. L., Wu, J., & Kong, J. D. (2023). A Twitter dataset for monkeypox, May 2022. Data in Brief, 48, 109118.

- Lieberman, B., Kong, J. D., Gusinow, R., Asgary, A., Bragazzi, N. L., Choma, J., … & Mellado, B. (2023). Big data- and artificial intelligence-based hot-spot analysis of COVID-19: Gauteng, South Africa, as a case study. BMC Medical Informatics and Decision Making, 23(1), 19.

- Kong, J. D., Fevrier, K., Effoduh, J. O., & Bragazzi, N. L. (2023). Artificial intelligence, law, and vulnerabilities. In AI and Society (pp. 179-196). Chapman and Hall/CRC.

- Daba, H., Dese, K., Wakjira, E., Demlew, G., Yohannes, D., Lemma, K., … & Ayana, G. (2024). Epidemiological Trends and Clinical Features of Polio in Ethiopia: A Preliminary Pooled Data Analysis and Literature Review.

- Ogbuokiri, B., Ahmadi, A., Tripathi, N., Seyyed-Kalantari, L., Woldegerima, W. A., Mellado, B., … & Kong, J. D. (2025). Emotional reactions towards vaccination during the emergence of the Omicron variant: Insights from Twitter analysis in South Africa. Machine Learning with Applications, 20, 100644.

- Ebenezer, A., Iyaniwura, S. A., Omame, A., Han, Q., Wang, X., Bragazzi, N. L., … & Kong, J. D. (2025). Understanding Cholera Dynamics in African Countries with Persistent Outbreaks: A Mathematical Modelling Approach.

- Yazdinejad, A., Wang, H., & Kong, J. (2025). Advanced AI-driven methane emission detection, quantification, and localization in Canada: A hybrid multi-source fusion framework. Science of The Total Environment, 998, 180142.

- Ogbuokiri, B., Ahmadi, A., Nia, Z. M., Mellado, B., Wu, J., Orbinski, J., … & Kong, J. (2023). Vaccine hesitancy hotspots in Africa: an insight from geotagged Twitter posts. IEEE Transactions on Computational Social Systems, 11(1), 1325-1338.

- Ogbuokiri, B., Ahmadi, A., Mellado, B., Wu, J., Orbinski, J., Asgary, A., & Kong, J. (2022, December). Can post-vaccination sentiment affect the acceptance of booster jab? In International Conference on Intelligent Systems Design and Applications (pp. 200-211). Cham: Springer Nature Switzerland.

Week-by-Week Breakdown of In-Class Activities

| Date | Topics | Outcomes |
|---|---|---|
| Sept 07 | Week 1: Introduction to Data Science 0.1 What Is Data Science? 0.2 Why Python for Data Science? 0.3 About This Course 0.4 The Data Science Pipeline: Responsible, Locally Relevant, and Explainable Practices 0.5 Python Installation and Essential Libraries 1. Data Acquisition 1.1 Sourcing and Building Datasets for Data-Driven Research 1.2 Loading Data into Memory 1.3 Sampling and Subsetting Data 1.4 Reading Data from Files | 1. Define data science and understand its significance. 2. Explain why Python is a preferred language for data science. 3. Navigate the course structure and expectations. 4. Describe the stages of the data science pipeline and explain how principles of responsibility, local relevance, and explainability apply at each stage. 5. Install Python and essential libraries for data analysis. 6. Source variables from literature and media, identify matching public datasets, and build integrated datasets to address a research question. 7. Load, sample, subset, and read data efficiently from various file formats. |
| Sept 14 | Week 2: Social Data / Unconventional Data for Health and Well-Being and APIs 2.1 What Is Social Data? 2.2 The Social Data Landscape 2.3 How Social Data Is Used in Health and Well-Being Research 2.4 Basics of APIs: Endpoints, Keys, and Rate Limits 2.5 Accessing Data via RESTful APIs (e.g., Reddit API, GDELT, Facebook API, Wikipedia API) 2.6 Getting Reddit Posts 2.7 Getting Google Trends Data 2.8 Getting Wikipedia Trends Data 2.9 Getting Google News Data 2.10 Getting GDELT Data 2.11 Getting Facebook Posts 2.12 Getting Satellite Air Quality Data 2.13 Getting Weather Data 2.14 Other Sources of Social Data 2.15 Ethical and Legal Considerations of API Usage | 1. Define and describe social data and its role in public health and well-being research, and identify key sources of social and unconventional data relevant to health contexts. 2. Understand and explain the structure and function of APIs, including endpoints, keys, and rate limits. 3. Demonstrate the ability to access and retrieve data using RESTful APIs such as Reddit, GDELT, Facebook, Wikipedia, Google Trends, and others. 4. Retrieve unconventional data types such as weather patterns, satellite air quality data, and news trends. 5. Critically assess the ethical and legal implications of using APIs and social data in research. |
| Sept 21 | Week 3: Data Preparation 3.1 Introduction to Pandas for Data Preparation 3.1.1 The DataFrame: structure and basics 3.1.2 Previewing data 3.1.3 Renaming columns and indices for clarity 3.2 Combining Data 3.2.1 Concatenating DataFrames 3.2.2 Merging DataFrames 3.2.3 Joining DataFrames 3.2.4 Combining Data 3.3 Data Cleaning and Transformation 3.3.1 Removing unwanted data and duplicates 3.3.2 Handling missing or invalid data 3.3.3 Handling outliers 3.3.4 Data mapping and replacement 3.3.5 Discretization and binning 3.4 Data Type Handling and Conversion 3.4.1 Understanding and checking data types 3.4.2 Converting data types 3.4.3 Working with categorical data (label encoding, one-hot encoding) 3.5 Working with Dates and Time Series 3.5.1 Parsing dates 3.5.2 Extracting date components (year, month, day) 3.5.3 Sorting and indexing by datetime 3.6 Feature Engineering Basics 3.6.1 Creating new features from existing data 3.6.2 Applying functions 3.6.3 Grouping and aggregation 3.7 Selection and Subsetting of Data 3.7.1 Selecting columns and rows 3.7.2 Boolean indexing and filtering 3.7.3 Conditional selection 3.8 Introduction to Text Cleaning 3.8.1 Basic string methods (lowercasing, trimming) 3.8.2 Removing special characters and punctuation | 1. Use Pandas to explore and manipulate DataFrames, including previewing data, renaming columns, and combining datasets through concatenation, merging, and joining. 2. Clean and transform data by handling missing values, duplicates, outliers, and invalid entries, and by applying data mapping, discretization, and binning techniques. 3. Understand and manage data types, including converting types and working with categorical variables using label encoding and one-hot encoding. 4. Work effectively with dates and time series data by parsing dates, extracting components, and indexing or sorting by datetime. 5. Perform basic feature engineering, including creating new features, applying functions, and performing grouping and aggregation. 6. Select, subset, and filter data using column/row selection, Boolean indexing, and conditional selection. 7. Conduct introductory text cleaning using string methods to prepare textual data for analysis. |
| Sept 28 | Week 4: Exploratory Data Analysis (EDA) 4.1 Descriptive Statistics and Summary Measures: 1. Mean, median, mode 2. Variance, standard deviation 3. Range, interquartile range (IQR) 4. Skewness and kurtosis 5. Correlation and covariance 4.2 Visualizing Data: 1. Histograms – visualize frequency and distribution shape 2. Box plots – examine spread, central tendency, skewness, and outliers 3. Line plots – trends over time 4. Scatter plots – relationships between two variables 5. Bar charts – compare categories 6. Pie charts – proportional data 7. Pair plots – relationships across multiple variables 8. Heatmaps – visualize correlation matrices and dense data grids 4.3 Investigating Distribution Shape and Assumptions 4.3.1 Investigating the Shape of Distributions: visual tools (histograms, box plots); statistical tests for normality (Shapiro–Wilk test, D’Agostino–Pearson test, Kolmogorov–Smirnov test) 4.3.2 Testing Statistical Assumptions: normality; homoscedasticity (equal variance across groups) with Levene’s test; independence of features 4.3.3 Comparing Distributions Across Groups: Mann–Whitney U test; Wilcoxon signed-rank test; Kruskal–Wallis test; t-test; ANOVA 4.4 Feature Selection and Dimensionality Reduction: 1. Manual and automated feature selection: correlation-based selection, mutual information, recursive feature elimination (intro) 2. Dimensionality reduction techniques: principal component analysis (PCA) | 1. Compute and interpret descriptive statistics, including measures of central tendency, dispersion, skewness, kurtosis, correlation, and covariance. 2. Visualize data effectively using histograms, box plots, line plots, scatter plots, bar charts, pie charts, pair plots, and heatmaps. 3. Investigate the shape of data distributions and assess statistical assumptions, including normality, homoscedasticity, and independence of features, using appropriate visual and statistical tests. 4. Compare distributions across groups using parametric and non-parametric tests (t-test, ANOVA, Mann–Whitney U test, Wilcoxon signed-rank test, Kruskal–Wallis test). 5. Perform feature selection and preliminary dimensionality reduction using correlation-based methods, mutual information, recursive feature elimination, and principal component analysis (PCA). 6. Apply exploratory data analysis techniques to extract insights and prepare datasets for subsequent modeling and analysis. |
| Oct 05 | Week 5: Data Modeling and Evaluation Using Machine Learning 5.1 Introduction to Machine Learning 5.1.1 Basic terminology (features, labels, training vs. test data, model, algorithm, prediction, evaluation) 5.1.2 Overview of the machine learning workflow (data → model → evaluation → deployment) 5.2 Types of Machine Learning Approaches 5.2.1 Supervised learning 5.2.2 Unsupervised learning 5.2.3 Semi-supervised learning 5.2.4 Reinforcement learning 5.3 Machine Learning Model Types 5.3.1 Discriminative (predictive) models (e.g., Logistic Regression, SVM, Decision Trees, Random Forests) 5.3.2 Generative models (e.g., Naive Bayes, Gaussian Mixture Models, Generative Adversarial Networks [GANs]) 5.4 Preparing Data for Modeling 5.4.1 Data normalization and standardization 5.4.2 Splitting data into training, validation, and test sets 5.4.3 Cross-validation techniques (e.g., K-fold, stratified K-fold) 5.5 Model Evaluation and Metrics 5.5.1 Error types: bias, variance 5.5.2 Evaluation metrics: 1. For classification: accuracy, precision, recall, F1-score, ROC-AUC 2. For regression: MSE, RMSE, MAE, R² 5.5.3 Loss functions and cost functions (MSE, cross-entropy, hinge loss) 5.6 Learning and Optimizing Model Parameters 5.6.1 Hyperparameters vs. learned parameters 5.6.2 Grid search and randomized search 5.7 Feature Engineering 5.7.1 Feature selection: filter, wrapper, embedded methods 5.7.2 Feature extraction: PCA 5.8 Model Challenges 5.8.1 Underfitting and overfitting 5.8.2 Bias-variance tradeoff 5.8.3 Addressing imbalanced datasets 5.9 Introduction to Explainable AI 5.9.1 Importance of interpretability and transparency 5.9.2 Basic model interpretability techniques 5.9.3 Ensuring models are responsible, locally relevant, and explainable | 1. Explain fundamental machine learning terminology, including features, labels, training and test data, models, algorithms, predictions, and evaluation metrics. 2. Distinguish between different categories of machine learning models, including discriminative (predictive) and generative models. 3. Compare and apply different learning paradigms: supervised, unsupervised, semi-supervised, and reinforcement learning. 4. Design a model development workflow, including training, validation, cross-validation, and addressing the bias-variance tradeoff. 5. Evaluate machine learning models using appropriate error, loss, and cost functions, and interpret classification and regression metrics (accuracy, precision, recall, F1-score, ROC-AUC, MAE, MSE, RMSE, R²). 6. Apply feature engineering techniques, including feature selection and extraction (e.g., PCA), to improve model performance. 7. Identify and mitigate challenges in model generalization, such as underfitting and overfitting, and apply regularization and hyperparameter tuning strategies. 8. Explain the importance of model interpretability and transparency, and implement basic explainable AI (XAI) techniques. 9. Ensure machine learning models are responsible, locally relevant, and interpretable throughout the modeling and evaluation process. |
| Oct 12 | Thanksgiving Day / Reading Week (University closed) | |
| Oct 19 | Week 6: Supervised Learning (Regression) 6.1 Variable Selection for Regression 6.1.1 Detecting Multicollinearity 6.1.2 Strategies to Address Multicollinearity 6.1.3 Sequential Feature Selection Methods: Forward, Backward, and Bidirectional Approaches 6.2 Building a Linear Regression Model 6.2.1 Splitting Data into Training and Test Sets 6.2.2 Building and Training the Model 6.2.3 Hyperparameter Tuning and Optimization 6.2.4 Making Predictions with the Model 6.3 Evaluating Regression Models 6.3.1 Performance Metrics: MAE, MSE, RMSE, R² 6.3.2 Residual Analysis and Assumption Checking | 1. Identify relevant variables for regression analysis and detect multicollinearity in datasets. 2. Apply strategies to address multicollinearity and perform sequential feature selection using forward, backward, and bidirectional approaches. 3. Build and train linear regression models using separate training and test sets. 4. Optimize model performance through hyperparameter tuning and make predictions with the trained model. 5. Evaluate regression models using key metrics (MAE, MSE, RMSE, R²) and conduct residual analysis to verify model assumptions. |
| Oct 26 | Week 7: Supervised Learning (Classification) 7.1 Key Classification Methods 7.1.1 Logistic Regression: 1. Model development and prediction 2. Model evaluation using confusion matrix, ROC curve, and AUC 3. Hyperparameter tuning and optimization 7.1.2 Nearest Neighbor Classification (KNN): 1. Model development and prediction 2. Model evaluation using confusion matrix, ROC curve, and AUC 3. Hyperparameter tuning 7.1.3 Naïve Bayes Classification: 1. Model development and prediction 2. Model evaluation using confusion matrix, ROC curve, and AUC 3. Handling categorical and continuous features 4. Hyperparameter optimization | 1. Understand key classification methods (Logistic Regression, K-Nearest Neighbor, Naïve Bayes) and their underlying assumptions. 2. Develop and implement predictive classification models using real-world datasets. 3. Evaluate classification performance using appropriate metrics. 4. Apply hyperparameter tuning and optimization techniques to improve model performance. 5. Handle different data types effectively, including categorical and continuous features, within classification models. 6. Compare the strengths, limitations, and use cases of Logistic Regression, KNN, and Naïve Bayes classifiers. 7. Select and justify the most appropriate classification approach for a given data science problem. |
| Nov 02 | Week 8: Decision Trees and Ensemble Methods 8.1 Decision Trees (Classification and Regression Trees – CART): 1. Building and training a decision tree model 2. Visualizing decision tree structure and decision rules 3. Model prediction and interpretability 4. Evaluation using confusion matrix, ROC curve, and classification metrics 5. Pruning techniques to avoid overfitting 6. Hyperparameter tuning (e.g., max depth, min samples split, min samples leaf) 8.2 Ensemble Methods: Random Forests: 1. Introduction to ensemble learning and bagging 2. Model development using random forests 3. Feature importance ranking and interpretation 4. Prediction and evaluation (confusion matrix, ROC curve, AUC) 5. Comparison with individual decision trees 6. Hyperparameter optimization (e.g., number of trees, max features, bootstrap sampling) 8.3 Gradient Boosting Machines (GBM): 1. Introduction to boosting and its difference from bagging 2. How gradient boosting works: sequential learning and residual errors 3. Model development using gradient boosting (e.g., using XGBoost) 4. Evaluation using appropriate classification metrics 5. Regularization and overfitting control in boosting 6. Hyperparameter tuning (learning rate, number of estimators, max depth) 8.4 Comparing Tree-Based Models: 1. Decision Trees vs. Random Forests vs. Gradient Boosting 2. Performance trade-offs: accuracy, interpretability, speed 3. When to use each method | 1. Build and evaluate decision tree models for both classification and regression, applying pruning and hyperparameter tuning to improve performance. 2. Develop and interpret ensemble models (Random Forests and Gradient Boosting), including feature importance analysis. 3. Compare bagging and boosting approaches, understanding their strengths, weaknesses, and when to apply each. 4. Apply appropriate evaluation metrics (confusion matrix, ROC curve, AUC, and classification metrics) to assess model performance. 5. Select the most suitable tree-based method (Decision Trees, Random Forests, or Gradient Boosting) based on trade-offs in accuracy, interpretability, and computational efficiency. |
| Nov 09 | Week 9: Unsupervised Learning (Clustering) 9.1 Introduction to clustering and its applications 9.2 K-Means Clustering 9.2.1 Concept and intuition 9.2.2 Choosing the number of clusters (elbow method, silhouette score) 9.2.3 Strengths and limitations of K-means 9.3 Hierarchical Clustering 9.3.1 Agglomerative vs. divisive methods 9.3.2 Dendrograms and linkage criteria (single, complete, average) 9.3.3 Choosing the optimal number of clusters 9.3.4 Comparing to K-means | 1. Understand the principles, applications, and types of clustering in unsupervised learning. 2. Apply K-Means clustering, including selecting the number of clusters and interpreting cluster assignments. 3. Perform hierarchical clustering, interpret dendrograms, and choose appropriate linkage criteria. 4. Compare and contrast K-Means and hierarchical clustering, including strengths, limitations, and use cases. 5. Evaluate clustering results using appropriate metrics and diagnostics (e.g., silhouette score, elbow method). |
| Nov 16 | Week 10: Further Avenues in Data Science 10.1 Topic Modeling 10.1.1 Introduction to topic modeling and its applications (e.g., summarizing documents, analyzing public discourse, literature reviews) 10.1.2 Overview of unsupervised learning in NLP 10.1.3 Techniques: Latent Dirichlet Allocation (LDA), Non-negative Matrix Factorization (NMF) 10.1.4 Preprocessing for topic modeling: 1. Tokenization, stop word removal, lemmatization 2. Vectorization using TF-IDF or count vectorizers 10.1.5 Interpreting and visualizing topics (e.g., pyLDAvis) 10.2 Sentiment Analysis 10.2.1 Overview and real-world applications (social media, reviews, political sentiment) 10.2.2 Text preprocessing: lowercasing, punctuation removal, stop word filtering 10.2.3 Feature extraction: Bag of Words (BoW), TF-IDF; word embeddings (intro to Word2Vec/GloVe) 10.2.4 Methods: rule-based sentiment (e.g., VADER, TextBlob); machine learning-based sentiment classification 10.2.5 Model evaluation: precision, recall, F1-score, accuracy | 1. Understand the principles and applications of topic modeling and its role in uncovering themes in textual data. 2. Preprocess text data and implement topic modeling techniques such as LDA and NMF, including interpreting and visualizing topics. 3. Understand the fundamentals and applications of sentiment analysis in real-world contexts (social media, reviews, political sentiment). 4. Preprocess text for sentiment analysis, extract features (BoW, TF-IDF, word embeddings), and apply both rule-based and machine learning-based sentiment classification. 5. Evaluate the performance of sentiment analysis models using appropriate metrics (precision, recall, F1-score, accuracy). |
| Nov 23 | Project presentations | |
| Nov 30 | Project presentations | |